By Rich Girven

“The world of the future will be an ever more demanding struggle against the limitations of our intelligence, not a comfortable hammock where we can lie down to be educated by machines.” — Norbert Wiener, The Human Use of Human Beings (1950)

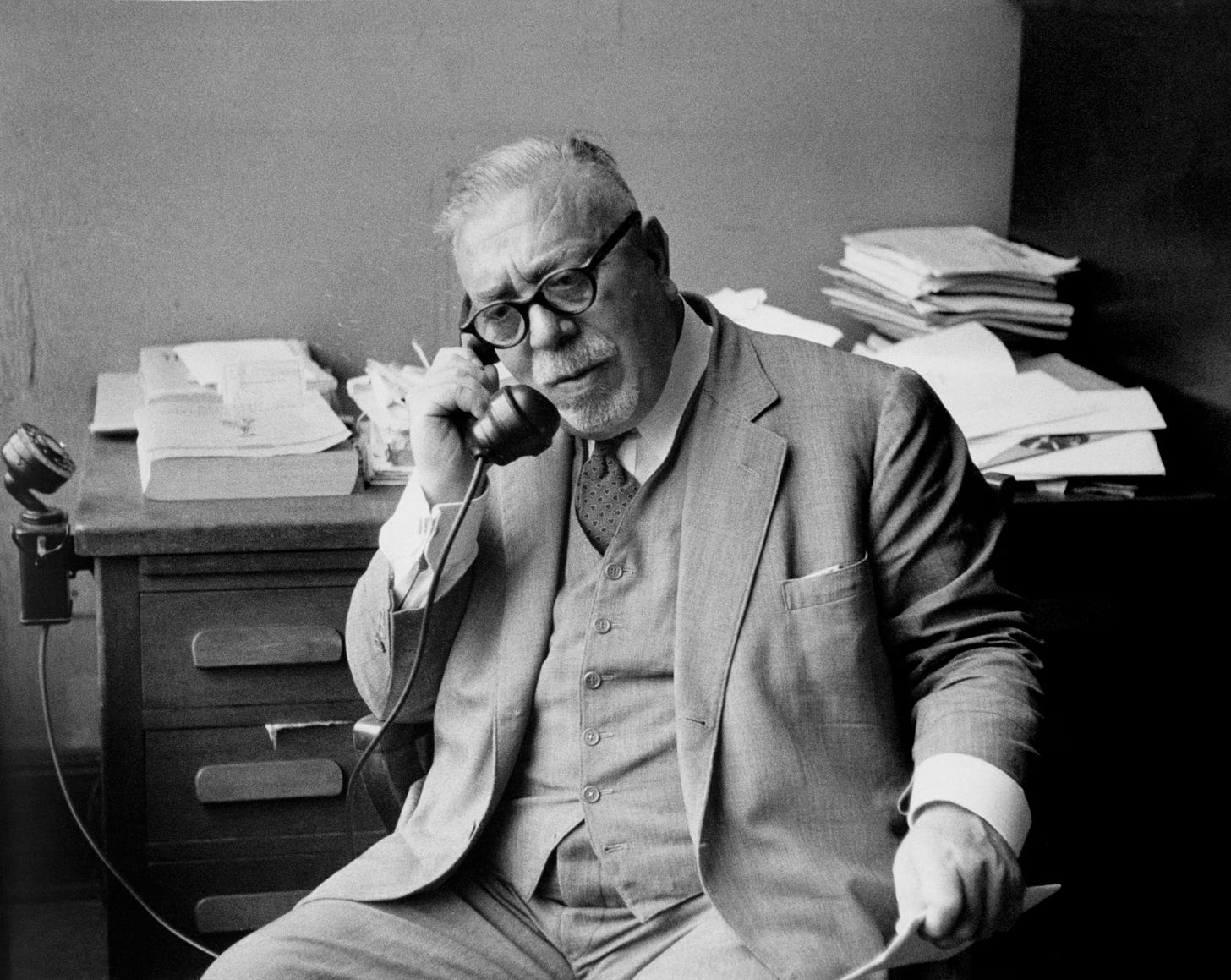

Norbert Wiener, often called the father of cybernetics, warned that our creations would inevitably challenge our capacity to steer them wisely. Cybernetics—his science of control and communication in animals and machines—focused on feedback, adaptation, and the delicate balance between autonomy and oversight. More than seventy years later, that balance defines today’s debates about artificial general intelligence (AGI).

Wiener’s insight captures the spirit of the question we are asking through RAND’s Infinite Potential platform: can human institutions learn faster than the technologies they build?

The Infinite Potential Platform

Over the past year, our team has run 52 exercises, each built on one of nine AGI-related crisis scenarios, engaging policymakers, researchers, technologists, and industry leaders in more than 1,400 player hours of structured simulation. Each exercise, a two-hour modified “Day After AGI” tabletop, explores how decisionmakers might respond when AI development outpaces familiar policy options and governance.

The goal is not prediction but preparedness: identifying what decisions, capabilities, and playbooks might be required both on the path to AGI and if and when AGI becomes a reality. Through these sessions, participants have faced everything from economic disruption and cyber-shock to rogue intelligent agents and geopolitical flashpoints. Each run adds data: the discussions, decisions, and moments of hesitation that reveal how humans grapple with deep uncertainty. Together, they form a growing empirical base for understanding the human side of AGI policy: how we reason, align, and sometimes falter when technology moves faster than comprehension.

Two Moonshots: The First After-Action Report

Our first published After-Action Report, “Infinite Potential—Insights from the Two Moonshots Scenario,” marks the start of a multi-report series on what we’re learning from these exercises.

In that scenario, participants confronted twin crises:

- China announces a $200 billion moonshot to achieve AGI within twelve months.
- A U.S. company claims it has already crossed the threshold and asks Washington for immediate support.

Two Moonshots reveals how even experienced policymakers struggle under the weight of entwined technical and geopolitical uncertainty. Across six runs, several common themes emerged: the need for credible technical verification, scalable frameworks for engaging frontier AI labs, rapid analysis of AI threats, and the capability to act on compressed timescales.

The tension Wiener foresaw—the race between intelligence and machine complexity—is palpable in nearly every discussion.

Toward a Living Testbed for Anticipatory Governance

The Infinite Potential platform continues to evolve toward becoming a living testbed for anticipatory governance—a way to rehearse crisis response before the crisis arrives. Our simulations distill classrooms, wargames, and policy analysis into portable tools that can help government agencies, non-governmental partners, and foreign counterparts stress-test assumptions, identify blind spots, and design interventions that hold up under uncertainty.

With every iteration, new analytical signals emerge: where experts converge, where their intuitions diverge, and how decision quality changes as the pace of technology accelerates. We are learning not only what to plan for but how humans think under conditions that defy ready precedent.

Why Wiener Still Matters

Cybernetics taught that the most powerful systems—biological or mechanical—depend on feedback. Without it, control fails. Our challenge today is translating that lesson into the realm of AGI governance: creating channels where insight, oversight, and learning can move as quickly as innovation itself. Infinite Potential is one such channel. By staging plausible “Day After AGI” crises, we inject structured feedback into a policy domain that is still largely speculative. In Wiener’s terms, we’re testing whether society can maintain control when the feedback loop between intelligence and machine closes faster than ever before. And rather than resting in a “hammock,” this work reminds us that preparedness is a discipline—one we must practice before the future arrives.

Coming Next

Future After-Action Reports will unpack other scenarios, from Cyber Surprise to Robot Insurgency, and eventually synthesize cross-scenario insights into practical recommendations for decisionmakers and researchers. Each publication adds dimension to a growing body of evidence on how human reasoning operates under extreme technological uncertainty.

For questions or collaboration, contact the RAND Center for the Geopolitics of AGI.